Ethereum Mining GPU Performance Roundup

I was just curious what kind of performance people are getting on the testnet with their setups. The Go implementation seems to be happily mining away and updating the hash rate, which varies between … and … kH/s. However, I do get a "peer not found" error at the very beginning, as discussed in the other thread, so I'm wondering: is it actually mining at all, or is this just local?

So my stats might have to be taken with a grain of salt.

Interesting, I'm also using the Go implementation for the hash rate I mentioned. I'm not sure who to ask, though. StephanTual, maybe? These screenshots were taken within a minute of one another.

Hey guys, I'm somewhat new to all of this, but I figured I would give you my data.

I am running an Intel i…K on Ubuntu and getting a pretty steady … kH/s. There is no GPU miner publicly available yet, is there? If there is, I would love to see some specs on the GPUs tested.

Hey carloscarlson, thanks a lot for the input! Which code base did you use, by the way?

It was the Go implementation, using the command line.

(GitHub link) I have not verified that performance myself; I'm just reporting here what I read. Keep in mind this seems to be a raw measurement done with the ethash benchmark, not a measurement taken while integrated and running against the testnet. Though, as you say, we don't have much context on the code comment! What does bandwidth impact, exactly?

From these numbers I assume the Go implementation is single-threaded, but I don't know the language well enough to be sure.
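To make the "raw benchmark" figure concrete: a raw hash rate is just the number of hash evaluations divided by wall-clock time, with no networking, work fetching, or result submission in the loop. The minimal sketch below assumes Python and uses `hashlib.sha3_256` purely as a stand-in for the proof-of-work function; it is not Ethash, which is memory-bound and much slower per hash, so its absolute numbers say nothing about Ethash performance. The function name `measure_hashrate` is hypothetical.

```python
import hashlib
import time


def measure_hashrate(header: bytes, num_hashes: int) -> float:
    """Return a raw single-threaded rate in kH/s.

    Hashes header || nonce for nonces 0..num_hashes-1. SHA3-256 is only a
    stand-in for the real proof-of-work function (this is NOT Ethash).
    """
    start = time.perf_counter()
    for nonce in range(num_hashes):
        hashlib.sha3_256(header + nonce.to_bytes(8, "little")).digest()
    elapsed = time.perf_counter() - start
    return num_hashes / elapsed / 1000.0  # hashes per second -> kH/s


if __name__ == "__main__":
    rate = measure_hashrate(b"example-header", 100_000)
    print(f"{rate:.1f} kH/s (single thread, stand-in hash)")
```

A rate reported by a client mining against the testnet can come out lower than such a tight loop, since the client also does work outside the hashing itself.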

Can someone familiar with the Go implementation confirm whether CPU mining is currently single-threaded? Running my modified Ethash benchmark with multiple threads shows the hash rate increasing by slightly less than a multiple of the number of hardware cores, as expected.

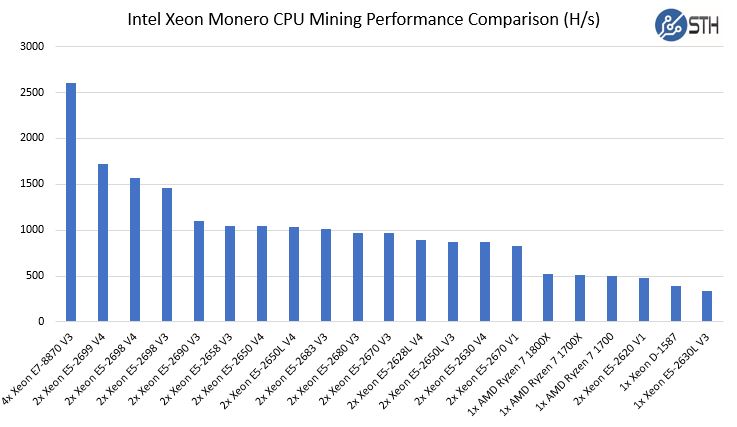
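The multithreaded test described above comes down to two pieces of bookkeeping: giving each hardware thread its own slice of the nonce space, and comparing the combined rate with the ideal of N × the single-thread rate. The sketch below assumes contiguous nonce ranges per worker (how the modified benchmark actually splits its work is not stated), and the function names are hypothetical. Because CPython's GIL limits CPU-bound threads, it shows only the arithmetic, not a parallel miner. One plausible reason for sub-linear scaling is that all cores share the same memory bandwidth, which matters for a memory-bound algorithm like Ethash.

```python
def partition_nonces(start: int, count: int, workers: int) -> list[tuple[int, int]]:
    """Split the nonce range [start, start + count) into `workers` contiguous,
    non-overlapping half-open ranges whose sizes differ by at most one."""
    if workers <= 0:
        raise ValueError("workers must be positive")
    base, extra = divmod(count, workers)
    ranges = []
    lo = start
    for i in range(workers):
        size = base + (1 if i < extra else 0)  # first `extra` workers get one more
        ranges.append((lo, lo + size))
        lo += size
    return ranges


def scaling_efficiency(single_rate: float, multi_rate: float, cores: int) -> float:
    """Measured multithreaded rate as a fraction of ideal linear scaling.

    1.0 means the rate grew exactly `cores`-fold; values just below 1.0
    correspond to "a bit less than a multiple of the core count".
    """
    return multi_rate / (single_rate * cores)


if __name__ == "__main__":
    print(partition_nonces(0, 10, 4))  # [(0, 3), (3, 6), (6, 8), (8, 10)]
    # Hypothetical rates in kH/s, not measurements from this thread.
    print(scaling_efficiency(100.0, 700.0, 8))  # 0.875
```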

The setup is a dual-socket Xeon E… v2. To me, this further confirms that CPU mining will not be economically competitive with GPU mining rigs, as intended by the design.

I'm still not seeing any arguments in there for accessing GPUs installed on the machine. This may be too much to ask, but can someone take an educated guess at which setup to buy to mine Ethereum today, and what benefits a GPU mining solution might bring in the future?

I am willing to pay a…

1. How much better is it to mine with Ubuntu vs. Windows?
2. If I have a 2 MB upload and 20 MB download connection speed, does it make sense to pay an extra 10 dollars a month for more bandwidth so I can mine more?
3. Can you give me three side-by-side comparisons: two medium-cost systems and one expensive system?

1. There is no difference.
2. Your connection is just fine.
3. You basically just need a normal computer; you don't need a top-of-the-line CPU, RAM, or HDD. If you plan on running more than one GPU, you will need a motherboard that can handle that, plus USB riser cards. Your expense will come down to which GPUs you want to run and how many, and that will determine which power supplies you need, which are going to be expensive as well.
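The power-supply point in answer 3 (card count and per-card draw determine the supply you need) reduces to simple arithmetic. A minimal sketch, assuming you know each card's full-load draw; the 80% load target, the wattages in the example, and the function names are illustrative assumptions, not figures from this thread. It also treats capacity as a single pool and ignores how load is split across multiple PSUs and their connectors.

```python
import math


def psu_capacity_needed(gpu_watts: float, num_gpus: int,
                        base_system_watts: float, target_load: float = 0.8) -> float:
    """Total PSU capacity (W) so that full-load draw sits at `target_load`
    of capacity. target_load=0.8 is an assumed headroom rule of thumb."""
    total_draw = base_system_watts + gpu_watts * num_gpus
    return total_draw / target_load


def psus_required(capacity_needed: float, psu_rating: float) -> int:
    """Number of identical PSUs of `psu_rating` watts covering the capacity."""
    return math.ceil(capacity_needed / psu_rating)


if __name__ == "__main__":
    # Hypothetical rig: four cards at 250 W each plus a 150 W base system.
    need = psu_capacity_needed(250, 4, 150)
    print(f"{need:.0f} W of PSU capacity, {psus_required(need, 750)} x 750 W units")
```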

If you are going to run multiple cards, there will be other expenses as well: a structure to hold all the parts (I just use adjustable shelves from Home Depot), a way to get all that power to the cards, cooling, etc. That's probably conservative, too. They could be on an equal playing field depending on how Dagger-Hashimoto turns out.

Genoil: This is because the full Dagger dataset is loaded onto the GPU. For now that's 1 GB, but it will eventually grow beyond the memory current cards have.
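Genoil's point about the dataset outgrowing GPU memory can be made concrete. The sketch below assumes the parameters and size rule of the later published Ethash specification (1 GiB initial dataset, 8 MiB growth per 30,000-block epoch, size trimmed so that size/128 is prime); the Dagger-Hashimoto version discussed in this thread may have used different values. The helper names (`is_prime`, `full_dataset_size`, `first_epoch_exceeding`, `epoch_of`) are hypothetical.

```python
# Constants as in the published Ethash specification (assumed here).
DATASET_BYTES_INIT = 2**30    # 1 GiB at epoch 0
DATASET_BYTES_GROWTH = 2**23  # +8 MiB per epoch
EPOCH_LENGTH = 30000          # blocks per epoch
MIX_BYTES = 128


def is_prime(n: int) -> bool:
    """Trial-division primality test (fast enough for n below ~2**25)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def epoch_of(block_number: int) -> int:
    return block_number // EPOCH_LENGTH


def full_dataset_size(epoch: int) -> int:
    """DAG size in bytes: linear growth, then stepped down until
    size // MIX_BYTES is prime (mirrors the spec's get_full_size)."""
    sz = DATASET_BYTES_INIT + DATASET_BYTES_GROWTH * epoch - MIX_BYTES
    while not is_prime(sz // MIX_BYTES):
        sz -= 2 * MIX_BYTES
    return sz


def first_epoch_exceeding(gpu_memory_bytes: int) -> int:
    """First epoch whose full dataset no longer fits in the given memory."""
    epoch = 0
    while full_dataset_size(epoch) <= gpu_memory_bytes:
        epoch += 1
    return epoch


if __name__ == "__main__":
    print(full_dataset_size(0))          # 1073739904 bytes at epoch 0
    print(first_epoch_exceeding(2**31))  # 129
```

Under these assumptions, a card with exactly 2 GiB of memory (ignoring driver overhead) can no longer hold the dataset from epoch 129, i.e. around block 3,870,000.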

Thanks for the info, Genoil! Which client did you use for mining with that AMD card? I'd like to see what my hash rate is, too!

Yep, the C++ OpenCL benchmark on Windows. That's really low compared to what MarioFortier is reporting.

Genoil, thanks for sharing the benchmarking information. Assuming your setup has fast memory, maybe the lower single-threaded CPU rate you got is due to the compiler not doing auto-vectorization.

I use the Intel compiler on Linux. Using its vectorization report option, I verified that the code was vectorized to match the hardware's AVX support. I will post my findings here.

Has anyone found ways to do better with Xeon Phis?