Mip robot black target icon

18 comments

Bitcoin cash on the move again

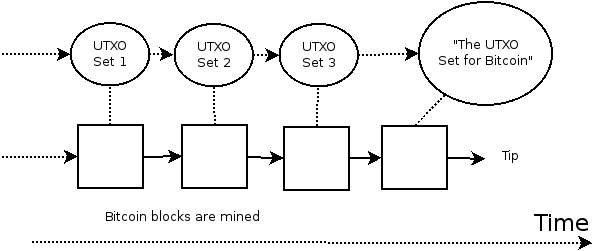

Also posted to the bitcoin-dev mailing list. Mining is a zero-sum game, so the extra latency of not doing so if they do directly impacts your profit margin. Secondly, having possession of the UTXO set is one of the minimum requirements to run a full node; the larger the set the harder it is to run a full node. Currently the maximum size of the UTXO set is unbounded as there is no consensus rule that limits growth, other than the block-size limit itself; as of writing the UTXO set is 1.

However, making any coins unspendable, regardless of age or value, is a politically untenable economic change. A merkle tree committing to the state of all transaction outputs, both spent and unspent, can provide a method of compactly proving the current state of an output. Both the state of a specific item in the MMR, as well the validity of changes to items in the MMR, can be proven with sized proofs consisting of a merkle path to the tip of the tree.

However, the bandwidth overhead per txin is substantial, so a more realistic implementation is be to have a UTXO cache for recent transactions, with TXO commitments acting as a alternate for the rare event that an old txout needs to be spent. We can take advantage of this by delaying the commitment, allowing it to be calculated well in advance of it actually being used, thus changing a latency-critical task into a much easier average throughput problem.

Concretely each block commits to the TXO set state as of block , in other words what the TXO commitment would have been blocks ago, if not for the block delay. Since that commitment only depends on the contents of the blockchain up until block , the contents of any block after are irrelevant to the calculation. V map of txouts definitely known to be unspent.

Appends must be low-latency; removals can be high-latency. In both cases recording an output as spent requires no more than two key: The existing UTXO set requires one key: This impacts bulk verification, e.

That said, TXO commitments provides other possible tradeoffs that can mitigate impact of slower validation throughput, such as skipping validation of old history, as well as fraud proof approaches.

Each TXO MMR state is a modification of the previous one with most information shared, so we an space-efficiently store a large number of TXO commitments states, where each state is a small delta of the previous state, by sharing unchanged data between each state; cycles are impossible in merkelized data structures, so simple reference counting is sufficient for garbage collection.

Data no longer needed can be pruned by dropping it from the database, and unpruned by adding it again. Now suppose state 2 is committed into the blockchain by the most recent block. Suppose recently created txout f is spent. We have all the data required to update the MMR, giving us state 4. It modifies two inner nodes and one leaf node:. If an archived txout is spent requires the transaction to provide the merkle path to the most recently committed TXO, in our case state 2.

If txout b is spent that means the transaction must provide the following data from state When we mark txout b as spent we get state Secondly by now state 3 has been committed into the chain, and transactions that want to spend txouts created as of state 3 must provide a TXO proof consisting of state 3 data. The leaf nodes for outputs g and h, and the inner node above them, are part of state 3, so we prune them:.

Finally, lets put this all together, by spending txouts a, c, and g, and creating three new txouts i, j, and k. State 3 was the most recently committed state, so the transactions spending a and g are providing merkle paths up to it.

This includes part of the state 2 data:. Again, state 4 related data can be pruned. In addition, depending on how the STXO set is implemented may also be able to prune data related to spent txouts after that state, including inner nodes where all txouts under them have been spent more on pruning spent inner nodes later.

A reasonable approach for the low-level cryptography may be to actually treat the two cases differently, with the TXO commitments committing too what data does and does not need to be kept on hand by the UTXO expiration rules. On the other hand, leaving that uncommitted allows for certain types of soft-forks where the protocol is changed to require more data than it previously did. Inner nodes in the TXO MMR can also be pruned if all leafs under them are fully spent; detecting this is easy if the TXO MMR is a merkle-sum tree 4 , with each inner node committing to the sum of the unspent txouts under it.

When a archived txout is spent the transaction is required to provide a merkle path to the most recent TXO commitment. The first challenge can be handled by specialized archival nodes, not unlike how some nodes make transaction data available to wallets via bloom filters or the Electrum protocol. For a miner though not having the data necessary to update the proofs as blocks are found means potentially losing out on transactions fees. So how much extra data is necessary to make this a non-issue?

The maximum number of relevant inner nodes changed is per block, so if there are non-archival blocks between the most recent TXO commitment and the pending TXO MMR tip, we have to store inner nodes - on the order of a few dozen MB even when n is a seemingly ridiculously high year worth of blocks.

Archived txout spends on the other hand can invalidate TXO MMR proofs at any level - consider the case of two adjacent txouts being spent. To guarantee success requires storing full proofs. Of course, a TXO commitment delay of a year sounds ridiculous. Full nodes would be forced to compute the commitment from scratch, in the same way they are forced to compute the UTXO state, or total work.

A more pragmatic approach is to accept that people will do that anyway, and instead assume that sufficiently old blocks are valid.

That leaves public attempts to falsify TXO commitments, done out in the open by the majority of hashing power. With this in mind, a longer-than-technically-necessary TXO commitment delay 7 may help ensure that full node software actually validates some minimum number of blocks out-of-the-box, without taking shortcuts.

Can a TXO commitment scheme be optimized sufficiently to be used directly without a commitment delay? Is it possible to use a metric other than age, e. For instance it might be reasonable for the TXO commitment proof size to be discounted, or ignored entirely, if a proof-of-propagation scheme e.

How does this interact with fraud proofs? Pettycoin Revisted Part I: Do we really need a mempool? Checkpoints that reject any chain without a specific block are a more common, if uglier, way of achieving this protection. A good homework problem is to figure out how the TXO commitment could be designed such that the delay could be reduced in a soft-fork.